Unlock the Power of Real-time Data Processing with Databricks and Google Cloud

We are excited to announce the official launch of the Google Pub/Sub connector for the Databricks Lakehouse Platform. This new connector adds to an extensive ecosystem of external data source connectors enabling you to effortlessly subscribe to Google Pub/Sub directly from Databricks to process and analyze data in real time.

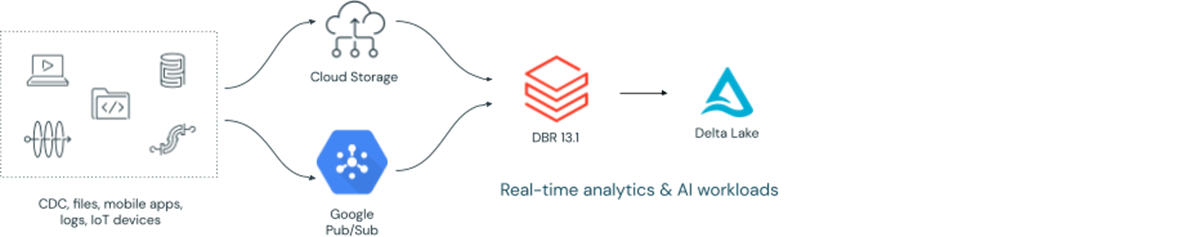

With the Google Pub/Sub connector, you can easily tap into the wealth of real-time data flowing through Pub/Sub topics. Whether it's streaming data from IoT devices, user interactions, or application logs, the ability to subscribe to Pub/Sub streams opens up a world of possibilities for your real-time analytics and machine learning use cases:

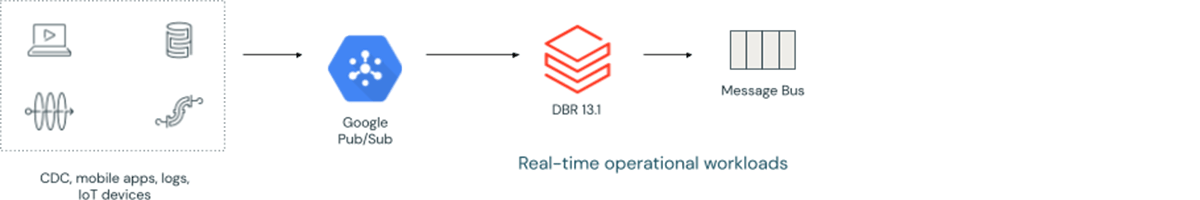

You can also use the Pub/Sub connector to drive low-latency operational use cases fueled with real-time data from Google Cloud.

In this blog, we'll discuss the key benefits of the Google Pub/Sub connector coupled with Structured Streaming on the Databricks Lakehouse Platform.

Exactly-Once Processing Semantics

Data integrity is critical when processing streaming data. The Google Pub/Sub connector in Databricks ensures exactly-once processing semantics, such that every record is processed without duplicates (on the subscribe side) or data loss, and when combined with a Delta Lake sink you get exactly-once delivery for the entire data pipeline. This means you can trust your data pipelines to operate reliably, accurately, and with low latency, even when handling high volumes of real-time data.

Simple to Configure

Simplicity is critical to effectively enable data engineers to work with streaming data. That's why we designed the Google Pub/Sub connector with a user-friendly syntax allowing you to easily configure your connection with Spark Structured Streaming using Python or Scala. By providing subscription, topic, and project details, you can quickly establish a connection and start consuming your Pub/Sub data streams right away.

Code example:
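As a minimal sketch of the configuration described above, the following Python helpers collect the subscription, topic, and project details and pass them to a Structured Streaming reader. The `pubsub` format name and the `subscriptionId`, `topicId`, and `projectId` option keys are assumptions based on the connector's public documentation rather than on this post, and the helper names and sample values are hypothetical.

```python
def pubsub_source_options(subscription_id, topic_id, project_id):
    """Return the three connection options as a dict, rejecting empty values.

    The option key names are assumptions; check them against the connector docs.
    """
    options = {
        "subscriptionId": subscription_id,
        "topicId": topic_id,
        "projectId": project_id,
    }
    missing = [key for key, value in options.items() if not value]
    if missing:
        raise ValueError(f"missing Pub/Sub options: {', '.join(missing)}")
    return options


def read_pubsub_stream(spark, options):
    """Build a streaming DataFrame from Pub/Sub on a live SparkSession.

    Assumes the source is registered under the format name "pubsub".
    """
    return spark.readStream.format("pubsub").options(**options).load()
```

In a Databricks notebook, where `spark` is already defined, this could be called as `read_pubsub_stream(spark, pubsub_source_options("my-sub", "my-topic", "my-project"))`. The authorization options discussed in the next section would need to be merged into the same dictionary before the stream can start.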

Enhanced Data Security and Granular Access Control

Data security is a top priority for any organization. Databricks recommends using secrets to securely authorize your Google Pub/Sub connection. To establish a secure connection, the following options are required:

- clientId

- clientEmail

- privateKey

- privateKeyId
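One way to keep these four values out of notebook source is to look each of them up in a secret scope. The sketch below assumes a secret getter with the shape of `dbutils.secrets.get(scope, key)`; the scope and secret key names are hypothetical, and the option key spelling (`clientId` and so on) follows the connector documentation and should be verified against it.

```python
# Maps each connector option to the name of the secret that holds its value.
# The secret names on the right are hypothetical placeholders.
AUTH_SECRET_KEYS = {
    "clientId": "pubsub-client-id",
    "clientEmail": "pubsub-client-email",
    "privateKey": "pubsub-private-key",
    "privateKeyId": "pubsub-private-key-id",
}


def build_auth_options(get_secret, scope):
    """Resolve all four authorization options from one secret scope.

    `get_secret` is any callable taking (scope, key) and returning a string,
    for example `dbutils.secrets.get` inside a Databricks notebook.
    """
    return {
        option: get_secret(scope, secret_key)
        for option, secret_key in AUTH_SECRET_KEYS.items()
    }
```

On Databricks this might be used as `auth = build_auth_options(dbutils.secrets.get, "my-scope")`, with the result passed to the reader through `.options(**auth)`. Passing the getter as an argument keeps the helper easy to exercise outside a notebook.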

Once the secure connection is established, fine-tuning access control is essential for maintaining data privacy and control. The Pub/Sub connector supports role-based access control (RBAC), allowing you to grant specific permissions to different users or groups, ensuring your data is accessed and processed only by authorized individuals. The table below describes the roles required for the configured credentials:

| Roles | Required / Optional | How it's used |
|---|---|---|
| roles/pubsub.viewer or roles/viewer | Required | Check if subscription exists and get subscription |
| roles/pubsub.subscriber | Required | Fetch data from a subscription |
| roles/pubsub.editor or roles/editor | Optional | Enables creation of a subscription if one doesn't exist and also enables use of the deleteSubscriptionOnStreamStop option to delete subscriptions on stream termination |
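The table can be read as two required role groups and one optional group. The following sketch encodes that reading as a pre-flight check over a list of role names granted to the service account. It only mirrors the table: it does not call Google Cloud IAM, it does not model how broader roles imply narrower ones, and the function and field names are hypothetical.

```python
# Each required group is satisfied by holding any one of its roles.
REQUIRED_ROLE_GROUPS = [
    {"roles/pubsub.viewer", "roles/viewer"},  # check and get the subscription
    {"roles/pubsub.subscriber"},              # fetch data from the subscription
]
# Holding either role allows subscription creation and deletion on stream stop.
OPTIONAL_ROLE_GROUP = {"roles/pubsub.editor", "roles/editor"}


def check_roles(granted_roles):
    """Compare granted role names against the role table."""
    granted = set(granted_roles)
    missing = [sorted(group) for group in REQUIRED_ROLE_GROUPS if not group & granted]
    return {
        "can_read": not missing,
        "missing": missing,
        "can_manage_subscription": bool(OPTIONAL_ROLE_GROUP & granted),
    }
```

For example, `check_roles(["roles/viewer"])` reports that the subscriber role is still missing, which is cheaper to discover before a stream is started than after it fails.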

Schema Matching

Knowing what records you've read and how they map to the DataFrame schema for the stream is straightforward. The Google Pub/Sub connector matches the incoming data to simplify the overall development process. The DataFrame schema matches the records that are fetched from Pub/Sub, as follows:

| Field | Type |
|---|---|
| messageId | StringType |
| payload | ArrayType[ByteType] |
| attributes | StringType |
| publishTimestampInMillis | LongType |

NOTE: The record must contain either a non-empty payload field or at least one attribute.
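To make the schema concrete, here is a small sketch that turns one fetched record, represented as a plain Python dict with the four fields above, into readable values. It assumes the payload holds UTF-8 text and that the `attributes` string is a JSON object; neither assumption is stated in this post, and the function name and the `text` output field are hypothetical.

```python
import json


def decode_record(record):
    """Decode one Pub/Sub record given as a dict with the schema's four fields."""
    raw_payload = record.get("payload") or b""
    # ByteType values are signed, so mask each one into the 0..255 range.
    payload = bytes(value & 0xFF for value in raw_payload)
    raw_attributes = record.get("attributes") or ""
    # Assumption: attributes arrive as a JSON object serialized to a string.
    attributes = json.loads(raw_attributes) if raw_attributes else {}
    if not payload and not attributes:
        raise ValueError("record needs a non-empty payload or at least one attribute")
    return {
        "messageId": record["messageId"],
        "text": payload.decode("utf-8"),  # assumption: payload is UTF-8 text
        "attributes": attributes,
        "publishTimestampInMillis": record["publishTimestampInMillis"],
    }
```

The final check mirrors the note above: a record with neither payload bytes nor attributes is rejected. Inside a streaming query, the same decoding would normally be expressed with DataFrame column expressions rather than row-by-row Python.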

Flexibility for Latency vs Cost

Spark Structured Streaming allows you to process data from your Google Pub/Sub sources incrementally while adding the option to control the trigger interval for each micro-batch. This gives you the greatest flexibility to control costs while ingesting and processing data at the frequency you need to maintain data freshness. The Trigger.AvailableNow() option consumes all the available records as an incremental batch while providing the option to configure batch size with options such as maxBytesPerTrigger (note that sizing options vary by data source). For more information on configuration options for Pub/Sub with incremental data processing in Spark Structured Streaming, please refer to our product documentation.
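The latency and cost trade-off comes down to which trigger the streaming write uses. The sketch below maps two hypothetical mode names onto the keyword arguments of PySpark's `DataStreamWriter.trigger`, then applies them to a Delta write. The helper names, mode names, and default interval are illustrative choices, not part of the connector.

```python
def trigger_kwargs(mode, interval="1 minute"):
    """Translate a mode name into keyword arguments for writeStream.trigger()."""
    if mode == "available_now":
        # Process everything currently available, then stop (lower cost).
        return {"availableNow": True}
    if mode == "interval":
        # Run a micro-batch on a fixed cadence (fresher data).
        return {"processingTime": interval}
    raise ValueError(f"unknown trigger mode: {mode!r}")


def start_delta_write(stream_df, table_name, checkpoint_path, mode="available_now"):
    """Start writing a streaming DataFrame to a Delta table with the chosen trigger."""
    return (
        stream_df.writeStream
        .format("delta")
        .option("checkpointLocation", checkpoint_path)
        .trigger(**trigger_kwargs(mode))
        .toTable(table_name)
    )
```

A scheduled job might call `start_delta_write(df, "events_bronze", "/tmp/checkpoints/events")` to drain the backlog and exit, while an always-on pipeline would pass `mode="interval"`. Batch sizing such as `maxBytesPerTrigger` is set on the reader side as a source option, not on the writer.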

Monitoring Your Streaming Metrics

To provide insights into your data streams, Spark Structured Streaming contains progress metrics. These metrics include the number of records fetched and ready to process, the size of those records, and the number of duplicates seen since the stream started. This enables you to track the progress and performance of your streaming jobs and tune them over time. The following is an example:
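As a hedged illustration, the helper below pulls the per-source metrics out of a progress report shaped like the dict-like value of `query.lastProgress` in PySpark. The metric names `numRecordsReadyToProcess`, `sizeOfRecordsReadyToProcess`, and `numDuplicatesSinceStreamStart` are assumptions drawn from the connector documentation, and the sample report is hand-written for illustration, not captured from a running stream.

```python
def pubsub_source_metrics(progress):
    """Sum the `metrics` entries of every source in one progress report.

    Metric values are reported as strings, so they are converted to ints.
    """
    totals = {}
    for source in progress.get("sources", []):
        for name, value in (source.get("metrics") or {}).items():
            totals[name] = totals.get(name, 0) + int(value)
    return totals


# Hand-written sample shaped like a progress report; not real connector output.
sample_progress = {
    "sources": [
        {
            "metrics": {
                "numRecordsReadyToProcess": "1",
                "sizeOfRecordsReadyToProcess": "8",
                "numDuplicatesSinceStreamStart": "1",
            }
        }
    ]
}
print(pubsub_source_metrics(sample_progress))
```

Logging these totals after each micro-batch gives a simple trend line for backlog size and duplicate counts, which can inform trigger and batch-size tuning.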

Customer Success

Xiaomi is a consumer electronics and smart manufacturing company with smartphones and smart hardware connected by an IoT platform at its core. It is one of the largest smartphone manufacturers in the world, the youngest member of the Fortune Global 500, and was one of our early preview customers for the Pub/Sub connector. Pan Zhang, Senior Staff Software Engineer in Xiaomi's International Internet Business Department, discusses the technical and business impact of the Pub/Sub connector on his team:

"We are thrilled about this connector as it revolutionizes the way we handle data ingestion and integration. Partnering with Databricks has significantly reduced the effort required in these critical areas. This collaboration has elevated our data capabilities, enabling us to stay at the forefront of technological advancements and drive innovation across our ecosystem. Furthermore, this collaboration has proven instrumental in reducing Time to Market (TTM) for Xiaomi international internet business when integrating with Google Cloud Platform (GCP) services. Pub/Sub connector has streamlined the process, enabling us to swiftly harness the power of GCP services and deliver innovative solutions to our customers. By accelerating the integration process, we can expedite the deployment of new features and enhancements, ultimately providing our users with a seamless and efficient experience."

Melexis is a Belgian microelectronics manufacturer that specializes in automotive sensors and advanced mixed-signal semiconductor solutions and is another one of our customers that has already seen early success with the Google Pub/Sub connector. David Van Hemelen, Data & Analytics Team Lead at Melexis, summarizes his team's satisfaction with the Pub/Sub connector and Databricks partnership at large:

"We wanted to stream and process large volumes of manufacturing data from our pub/sub setup into the Databricks platform so that we could leverage real-time insights for production monitoring, equipment efficiency analysis and expand our AI initiatives. The guidance provided by the Databricks team and the pub/sub connector itself exceeded our expectations in terms of functionality and performance making this a smooth and fast-paced implementation project."

Get Started Today

The Google Pub/Sub connector is available starting in Databricks Runtime 13.1. For more detail on how to get started, please refer to the "Subscribe to Google Pub/Sub" documentation.

At Databricks, we are constantly working to enhance our integrations and provide even more powerful data streaming capabilities to power your real-time analytics, AI, and operational applications from the unified Lakehouse Platform. We will also shortly announce support for Google Pub/Sub in Delta Live Tables, so stay tuned for more updates.

In the meantime, browse dozens of customer success stories around streaming on the Databricks Lakehouse Platform, or test-drive Databricks for free on your choice of cloud.
