What’s new from Data + AI Summit

Explore the latest breakthroughs in data and AI, from product launches to what’s coming next.

Lakebase

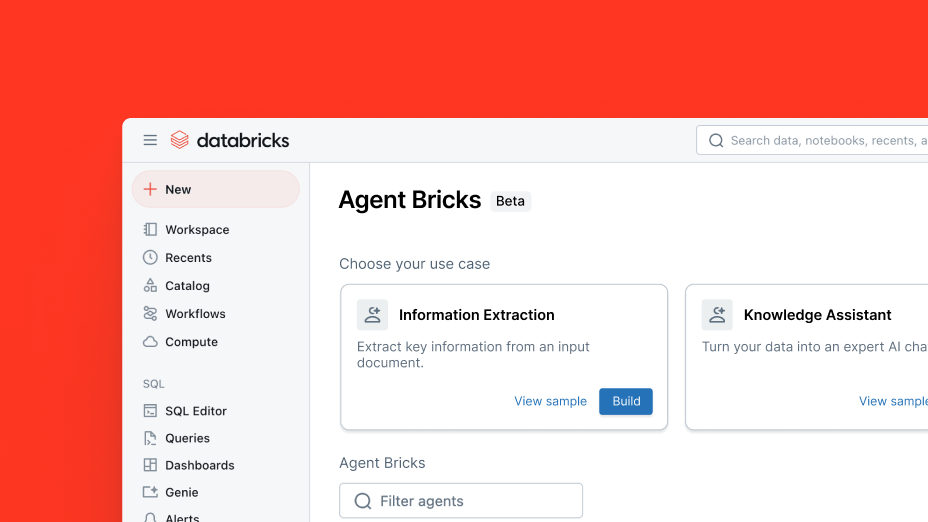

The first fully managed, Postgres-compatible transactional database engine designed for developers and AI agents

Mosaic AI Agent Bricks

Production AI agents optimized on your data

Lakeflow Designer

Production-quality ETL. No code required.

Explore more of the latest product launches

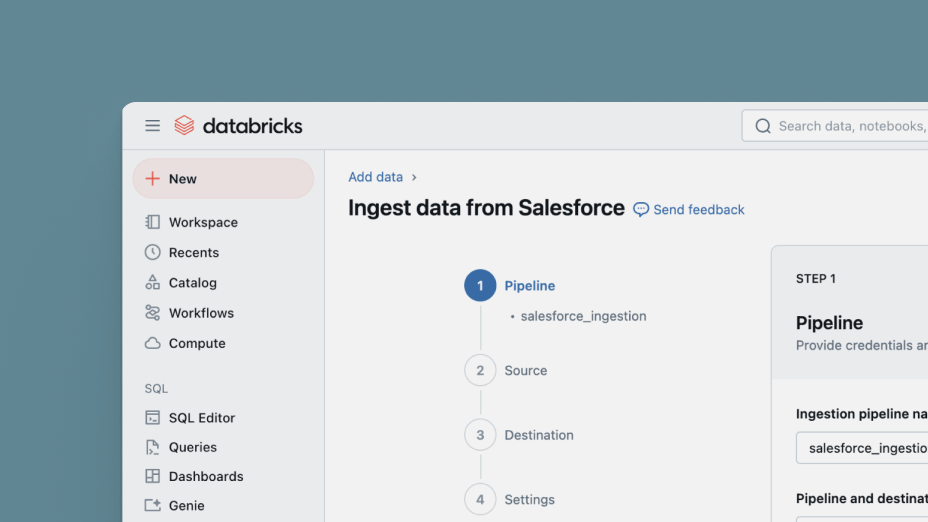

Lakeflow

Now in General Availability, Lakeflow is an end-to-end data engineering solution to build reliable data pipelines faster with all business-critical data. We’ve expanded ingestion capabilities and added a new declarative pipelines authoring experience.

Natively integrating Gemini Models

Build, deploy and scale AI agents to automate complex workflows using Google Gemini models directly on your data — securely and with unified governance — within Databricks.

Databricks Free Edition

Students and aspiring professionals can now build critical data and AI skills for free. Ingest data, build dashboards and train AI models on the same platform trusted by more than 60% of the Fortune 500, with the full range of use cases open to everyone for experimentation. Now in Public Preview.

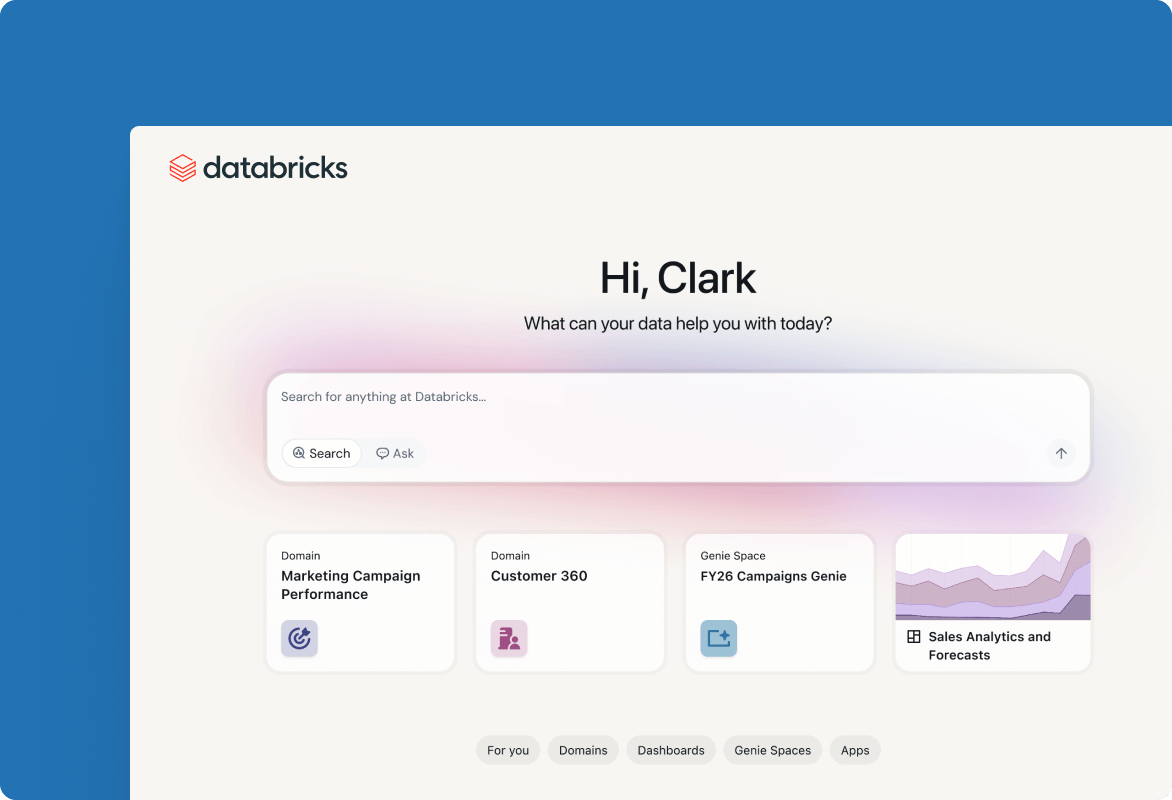

Databricks One

Business users will soon be able to interact with AI/BI Dashboards, ask their data questions in natural language through AI/BI Genie powered by deep research, quickly find relevant dashboards, and use custom-built Databricks Apps — all in an elegant, code-free environment. Now in Private Preview.

AI/BI Genie

Now in General Availability, AI/BI Genie lets users ask questions in natural language and get instant insights from data, with no coding required. Genie delivers answers as text summaries, tabular data and visualizations, along with an explanation of how it arrived at each answer.

Coming soon: To answer complex questions, Genie Deep Research creates research plans and analyzes multiple hypotheses — complete with citations.

Unity Catalog

Unity Catalog is a unified governance solution for modern data, AI and business. Now it’s the first catalog to enable interoperability across compute engines, first-party support for multiple table formats and unified business semantics. Explore the new capabilities now in preview.

Databricks Apps

Build, deploy and scale interactive data intelligence apps within your fully governed and secure Databricks environment to rapidly deliver user-facing tools — from LLM copilots and data quality dashboards to team-specific ops apps — where your data and AI assets already live.

Databricks Clean Rooms

Now generally available on Google Cloud, Databricks Clean Rooms becomes a truly comprehensive multicloud offering. Create a central clean room environment and collaborate with partners across AWS, Azure, GCP or any other data platform.

Lakebridge

Automate migration from legacy data warehouses to Databricks SQL and speed up implementation by 2x. Setting a new standard for end-to-end migration, Lakebridge covers profiling, assessment, conversion, validation and reconciliation.

Open source innovation

Spark Declarative Pipelines

Define and execute data pipelines for both batch and streaming ETL workloads across any Apache Spark™-supported data source, including cloud storage, message buses, change data feeds and external systems.
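To illustrate the declarative idea of defining datasets separately from executing them, here is a toy, plain-Python sketch. It is not the Spark Declarative Pipelines API: the `dataset` decorator, the `run_pipeline` function and the sample order data are all hypothetical, and in-memory lists stand in for Spark DataFrames. Each dataset states only what it is and what it depends on; the runner works out a valid execution order.

```python
from __future__ import annotations

from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical registry: dataset name -> (upstream names, transform function).
# This is NOT the Spark Declarative Pipelines API; it only mimics the idea that
# you declare *what* each dataset is and the engine decides *when* to build it.
_REGISTRY: dict[str, tuple[list[str], Callable[..., list[dict]]]] = {}


def dataset(name: str, depends_on: list[str] | None = None):
    """Hypothetical decorator that registers a dataset definition."""
    def register(fn: Callable[..., list[dict]]):
        _REGISTRY[name] = (depends_on or [], fn)
        return fn
    return register


def run_pipeline() -> dict[str, list[dict]]:
    """Resolve dependencies, then materialize every dataset in a valid order."""
    graph = {name: set(deps) for name, (deps, _) in _REGISTRY.items()}
    results: dict[str, list[dict]] = {}
    for name in TopologicalSorter(graph).static_order():
        deps, fn = _REGISTRY[name]
        # Pass each upstream dataset's rows as positional arguments.
        results[name] = fn(*(results[d] for d in deps))
    return results


# Declarations can appear in any order; the runner sorts them.
@dataset("clean_orders", depends_on=["raw_orders"])
def clean_orders(raw):
    return [r for r in raw if r["amount"] > 0]


@dataset("raw_orders")
def raw_orders():
    # Stand-in for a real source such as cloud storage or a message bus.
    return [{"id": 1, "amount": 30}, {"id": 2, "amount": -5}, {"id": 3, "amount": 12}]


@dataset("order_total", depends_on=["clean_orders"])
def order_total(clean):
    return [{"total": sum(r["amount"] for r in clean)}]


if __name__ == "__main__":
    print(run_pipeline()["order_total"])  # [{'total': 42}]
```

Note that `clean_orders` is declared before its upstream `raw_orders`; the topological sort, not the order of the source code, decides when each dataset is built.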

Ready to become a data + AI company?

Take the first steps in your data transformation