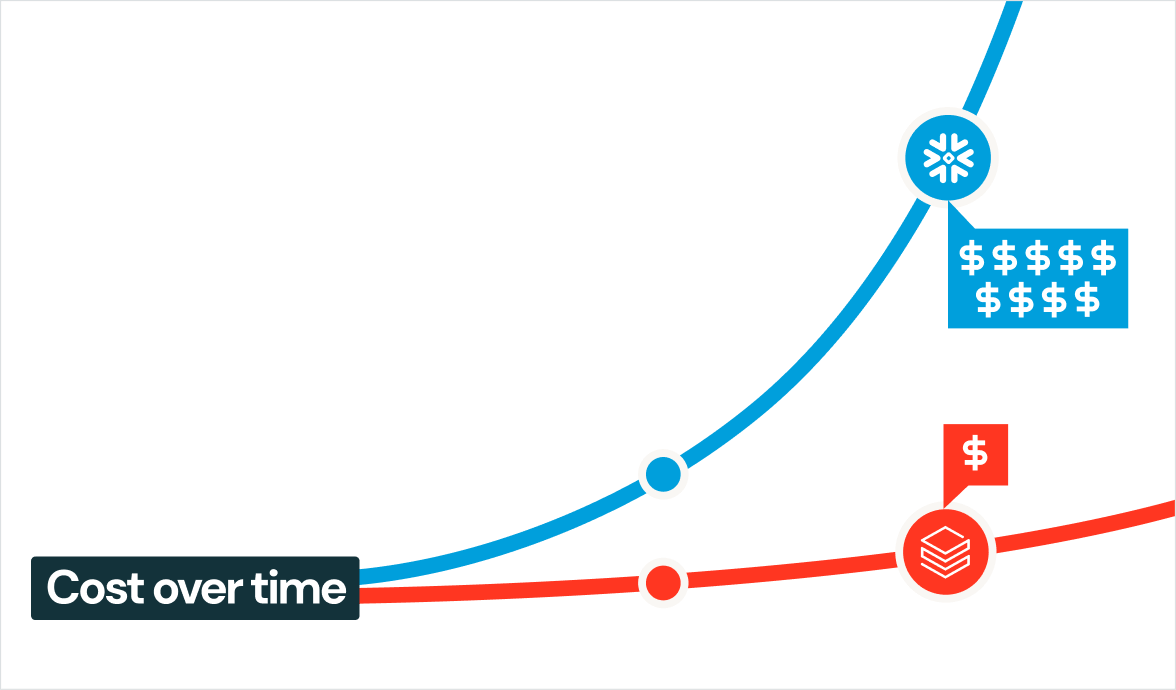

Databricks vs. Snowflake

Save more every year with the Databricks Data Intelligence Platform

Benefits

Lower TCO

Choose a cloud data warehouse for BI, ETL and AI/ML. ETL workloads typically account for 50% or more of an organization’s overall data costs. With a single, unified Data Intelligence Platform and built-in capabilities for BI and governance, Databricks delivers excellent value and savings across all of these use cases.

The rapid rise of LLMs and other AI applications is forcing companies to examine how to scale cost-efficiently. With Databricks, performance scales with your workloads, and we continue to provide market-leading TCO that holds at scale. You can dive deep into a Databricks and Snowflake performance test in this video.

The Databricks approach gives you ultimate flexibility. You can choose whether a warehouse is optimized for speed or for price. You can even bring your own cloud discounts when you use the Classic version of Databricks SQL.

Supporting capabilities include:

- Photon engine for fast query performance at low cost

- Predictive optimization to optimize table data layouts, resulting in faster queries and cheaper storage

Zero lock-in

Databricks is also built on open formats, open standards, open source, an open data catalog and open data sharing. Combined with the Databricks open lakehouse architecture, you get zero lock-in for your data. You can choose the engine and format that work best for you, and you’re not locked into Databricks compute.

The componentized nature of the Databricks Platform also means that you’re not locked into every component when building your own data platform. You can customize based on your specific business priorities and enterprise architectures.

Supporting capabilities include:

- Full support for Delta and Apache Iceberg™ table formats

- ANSI-compliant SQL and open source Apache Spark™

- Open data sharing with Delta Sharing

- Predictive optimization for all engines

- Unified governance for data warehousing, BI and AI/ML on an open data catalog with Unity Catalog

- AI functions that enable you to leverage foundation AI models directly in your data warehouse

- Performance isolation so consumers can query data through their own compute (SQL warehouse or cluster), avoiding contention with producers

Zero copy

Zero-copy data access with Unity Catalog eliminates the traditional trade-off between control and collaboration. Instead of duplicating data across warehouses, regions or teams, organizations define access policies once and share secure views of trusted data everywhere they're needed. With this capability, you can build a centralized metrics platform that serves many dashboarding use cases across lines of business (LOBs), all from a single governed source. You can also reduce redundant BI pipelines by exposing governed datasets directly through Unity Catalog, which lets business teams self-serve analytics without maintaining copies. The zero-copy model simplifies governance, ensures consistency and dramatically reduces the cost and complexity of enterprise data sharing.

Supporting capabilities include:

- Centralized governance with Unity Catalog to manage, govern, audit and track shared data on a single platform

- Cross-workspace and cross-region sharing with read access to Delta Lake tables

- Federated access control with Unity Catalog to maintain producer-consumer separation

- Support for BI tools and SQL warehouses so shared data can be queried directly via Databricks Lakehouse, Power BI, Tableau and other tools — without extracts or imports

- Native Delta Sharing integration to share governed data across clouds or external partners

- Efficient data loading with no data duplication, because data stays in place in your cloud storage

- Cost efficiency from eliminating storage duplication and reducing compute waste by centralizing logic and minimizing data movement

Unified governance

Get unparalleled governance by using a single catalog for all formats and use cases. Databricks unifies governance with Unity Catalog, the industry's only unified and open governance solution for data and AI. It empowers data scientists, analysts and engineers to securely discover, access and collaborate on trusted data and AI assets, improving productivity and supporting regulatory compliance.

Unity Catalog enhances interoperability and simplifies data management processes for organizations that integrate diverse datasets and models across different environments, including cloud platforms and external databases.

Unity Catalog managed tables can also accelerate queries by up to 20x. This is possible through features such as intelligent data skipping and in-memory caching of transaction metadata, which significantly improve query planning performance. Managed tables can also reduce costs by over 50% by automating processes such as clustering and statistics collection, which cuts both manual overhead and storage costs.

Supporting capabilities include:

- Unified governance across all platforms with one catalog

- Access management and security

- Data lineage to show a comprehensive view of how data is transformed and flows

- Discovery and observability

- Open data accessibility

- Interoperability and collaboration

Intelligent analytics for everyone

Data architects and data analysts need to get information fast. With Databricks, you can derive insights from all of your data in one platform, with no need to replicate data or manage access policies across multiple platforms. AI/BI is native to Databricks and unified with Databricks SQL and Unity Catalog, so there are no separate licenses to procure and no shadow data warehouse to manage. Now you can experience business intelligence with data intelligence. The agents driving AI/BI have deep knowledge of your enterprise data and your business semantics, so you get accurate answers to natural language questions, tailored to your organization. Your data analysts also get smarter self-service capabilities. Through a conversational interface, AI/BI Genie answers users' natural language questions while reducing their reliance on expert practitioners.

Streaming data makes real-time insights simple, so you can immediately improve the accuracy and actionability of your business intelligence. You can also publish datasets directly to your favorite BI tools (Power BI, Tableau, Looker, Excel, Google Sheets, Sigma, Qlik, ThoughtSpot and more) without managing ODBC/JDBC connections.

Supporting capabilities include:

- AI/BI Dashboards for AI-assisted visual insights

- AI/BI Genie to ask data questions in natural language

- Streaming data pipelines with Lakeflow Spark Declarative Pipelines

- Integrated BI tools so you can publish to them directly from your data warehouse

- Low-latency incremental data refreshes

Advanced AI/ML

Data engineers and data scientists need to work hand in hand so the right data is prepared properly for the right models. Databricks provides a unified platform for both data engineering and machine learning, supporting a variety of data types (including unstructured data) and real-time processing. Databricks also helps you leverage a wide variety of AI models and provides cost-effective inference solutions.

Supporting capabilities include:

- MLflow for managing the machine learning lifecycle

- Support for real-time AI inference use cases

- Distributed AI/ML workloads and notebooks

- LLMOps features for evaluating and monitoring GenAI use cases

Operational and analytical data together

Databricks Lakebase helps address operational and analytical fragmentation by introducing an operational database that runs alongside your existing analytics workflows in the Databricks Data Intelligence Platform. Built on open source PostgreSQL with separated compute and storage, Lakebase offers fully managed transactional capabilities, including low-latency inserts, updates, deletes and fast point lookups, and it’s integrated with Delta Lake and Unity Catalog. This opens the door for real-time applications to run at scale, side by side with dashboards and ML models without data duplication.

By eliminating the latency and operational overhead of syncing online transaction processing (OLTP) data into the data warehouse, Lakebase helps modernize transactional workloads for the AI era. Teams can power apps, APIs and real-time decision engines using the same governed datasets trusted by business analysts and data scientists. It’s a foundational shift in the modern data landscape.

Lakebase helps unify operational and analytical use cases by minimizing the friction between databases and the lakehouse. With native support for syncing Delta tables to and from Lakebase, teams can build applications that interact with the same datasets used for analytics and AI, without relying on custom reverse ETL pipelines.

Snowflake to Databricks Migration Guide

If you go beyond simple AI/ML use cases, implementing machine learning on Snowflake requires managing and operating additional tools. Over time, your architecture becomes more complex, and ETL costs increase too. With the Databricks Data Intelligence Platform, you get high-performing, cost-effective ETL and native support for AI.

Download this migration guide to learn:

- Five critical phases of your migration project

- Best practices to scale your lakehouse

- Resources to help with your migration journey