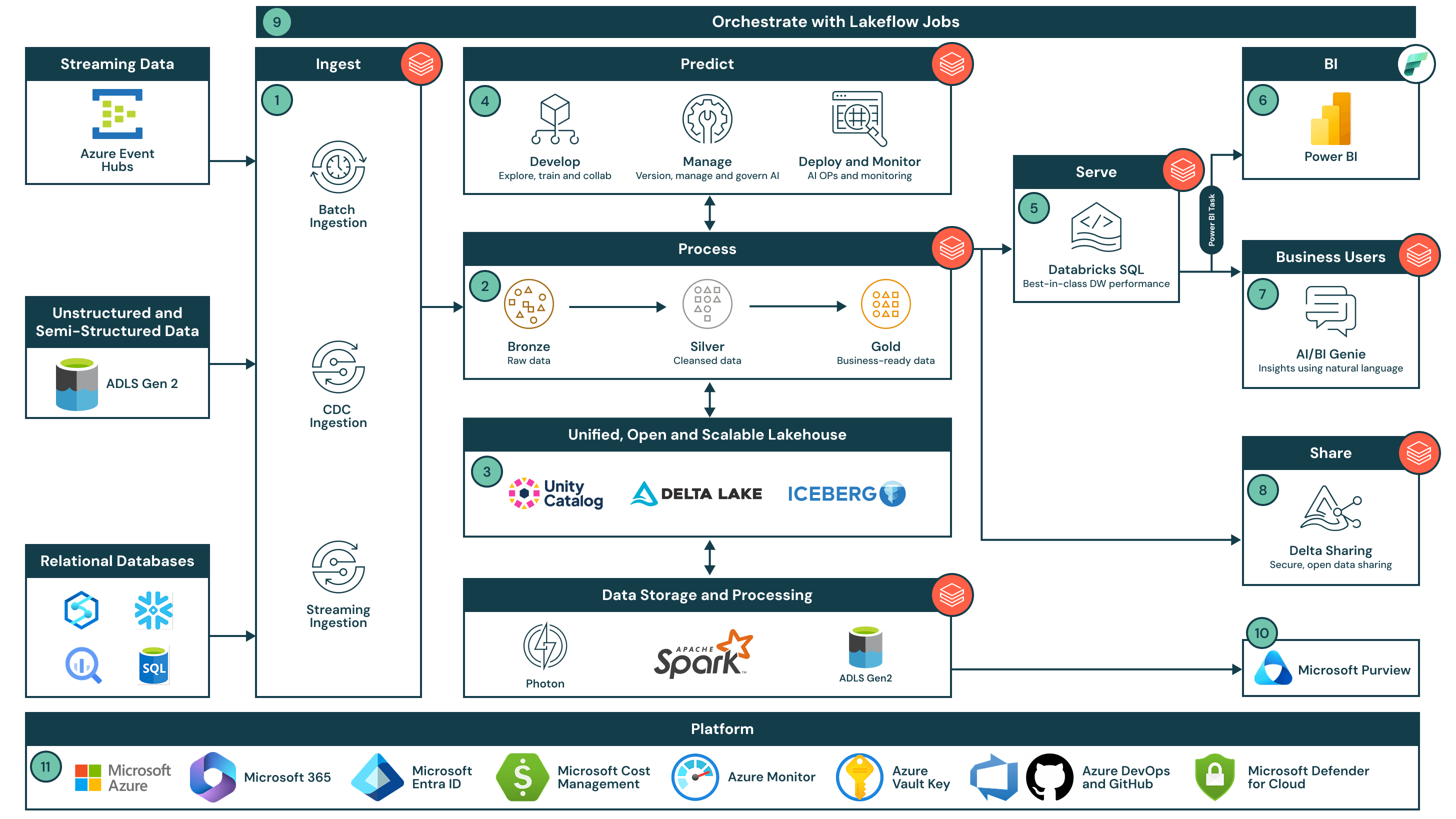

Data Intelligence End-to-End Architecture with Azure Databricks

The data intelligence end-to-end architecture provides a scalable, secure foundation for analytics, AI and real-time insights across both batch and streaming data.

Architecture summary

The data intelligence end-to-end architecture integrates with Power BI and Copilot in Microsoft Fabric, Microsoft Purview, Azure Data Lake Storage Gen2 (ADLS Gen2) and Azure Event Hubs to support data-driven decision-making across the enterprise. This solution demonstrates how you can combine the Data Intelligence Platform for Azure Databricks with Power BI to democratize data and AI while meeting enterprise requirements for security and scale. Starting with an open, unified lakehouse architecture governed by Unity Catalog, the platform uses an organization’s unique data to provide a simple, robust and accessible solution for ETL, data warehousing and AI, so teams can deliver data products more quickly and easily.

Use cases

This end-to-end architecture can be used to:

- Modernize a legacy data architecture by combining ETL, data warehousing and AI to create a simpler and future-proof platform

- Power real-time analytics use cases such as e-commerce recommendations, predictive maintenance and supply chain optimization at scale

- Build production-grade GenAI applications such as AI-driven customer service agents, personalization and document automation

- Empower business leaders within an organization to gain insights from their data without a deep technical skillset or custom-built dashboards

- Securely share or monetize data with partners and customers

Dataflow

- Data ingestion

- Stream data from Azure Event Hubs into Lakeflow Spark Declarative Pipelines, with schema enforcement and governance via Unity Catalog

- Use Auto Loader to incrementally ingest unstructured and semi-structured data from ADLS Gen2 into Delta Lake

- Access external relational systems using Lakehouse Federation, ensuring all sources follow the same governance model
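The idea behind incremental ingestion can be illustrated with a small, plain-Python sketch. This is a conceptual toy and not Auto Loader's API: the helper `ingest_new_files`, the `landing` directory and the JSON checkpoint file are all hypothetical names chosen for illustration. It only shows the general pattern of remembering which files have been processed so that each run picks up new arrivals only.

```python
import json
import tempfile
from pathlib import Path


def ingest_new_files(landing_dir: Path, checkpoint_file: Path) -> list[dict]:
    """Return records from files not seen in earlier runs (hypothetical helper)."""
    # Load the names of files already processed; empty on the first run.
    if checkpoint_file.exists():
        seen = set(json.loads(checkpoint_file.read_text()))
    else:
        seen = set()

    new_records = []
    for path in sorted(landing_dir.glob("*.json")):
        if path.name in seen:
            continue  # already ingested in a previous run
        new_records.append(json.loads(path.read_text()))
        seen.add(path.name)

    # Persist the updated checkpoint so the next run skips these files.
    checkpoint_file.write_text(json.dumps(sorted(seen)))
    return new_records


def demo() -> tuple[int, int, int]:
    """Run three ingestion passes over a temporary landing directory."""
    with tempfile.TemporaryDirectory() as tmp:
        landing = Path(tmp) / "landing"
        landing.mkdir()
        checkpoint = Path(tmp) / "checkpoint.json"

        (landing / "a.json").write_text(json.dumps({"id": 1}))
        first = len(ingest_new_files(landing, checkpoint))   # picks up a.json
        second = len(ingest_new_files(landing, checkpoint))  # nothing new

        (landing / "b.json").write_text(json.dumps({"id": 2}))
        third = len(ingest_new_files(landing, checkpoint))   # picks up only b.json
        return first, second, third
```

In a real deployment the checkpointing, schema handling and file discovery are handled by the platform; the sketch only makes the "process each file once" contract concrete.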

- Process both batch and streaming data at scale using Lakeflow Spark Declarative Pipelines and the Photon engine, following the medallion architecture.

- Bronze: Raw batch and streaming data ingested as is for retention and auditability

- Silver: Cleansed and joined datasets, with streaming and batch logic defined declaratively to reduce complexity

- Gold: Aggregated, business-ready data designed for consumption by downstream analytics and AI systems

- This unified approach allows teams to build resilient pipelines that support real-time and historical data processing in the same architecture
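The Bronze, Silver and Gold layers above can be sketched in plain Python to show what each stage is responsible for. This is a minimal illustration under stated assumptions, not a Lakeflow pipeline: the sample events, the field names and the functions `to_bronze`, `to_silver` and `to_gold` are hypothetical, and the Silver step here only cleanses (it does not join datasets).

```python
from collections import defaultdict

# Hypothetical raw events as they might land from batch files or a stream.
RAW_EVENTS = [
    {"order_id": "1", "region": "emea", "amount": "10.50"},
    {"order_id": "2", "region": "EMEA ", "amount": "4.50"},
    {"order_id": "3", "region": "amer", "amount": "not-a-number"},
    {"order_id": "4", "region": "amer", "amount": "20.00"},
]


def to_bronze(raw_events):
    """Bronze: keep every record exactly as received, for retention and audit."""
    return [dict(event) for event in raw_events]


def to_silver(bronze):
    """Silver: cleanse and standardize; drop rows whose amount cannot be parsed."""
    silver = []
    for row in bronze:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # the bad row remains available in Bronze for auditing
        silver.append({
            "order_id": int(row["order_id"]),
            "region": row["region"].strip().upper(),
            "amount": amount,
        })
    return silver


def to_gold(silver):
    """Gold: aggregate to a business-ready view (total amount per region)."""
    totals = defaultdict(float)
    for row in silver:
        totals[row["region"]] += row["amount"]
    return dict(totals)


bronze = to_bronze(RAW_EVENTS)
silver = to_silver(bronze)
gold = to_gold(silver)
```

Keeping the unparseable row in Bronze while excluding it from Silver reflects the split described above: the raw layer preserves everything for auditability, and quality rules apply only from Silver onward.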

- Store all data in an open, interoperable format using Delta Lake on ADLS Gen2.

Enable compatibility across engines like Delta, Apache Iceberg™ and Hudi while centralizing storage in a secure, scalable environment.

- Explore, enrich and train AI models using collaborative notebooks and governed ML tooling.

Use serverless notebooks for exploration and model training, with MLflow, feature store and Unity Catalog managing models, features and vector indexes.

- Serve ad hoc and high-concurrency queries directly from your data lake using Databricks SQL.

Provide fast, cost-efficient access to Gold-level data without needing to move or duplicate data.

- Visualize business-ready data in Power BI using semantic models connected to Unity Catalog.

Build reports in Microsoft Fabric with live connections to governed data via Databricks SQL.

- Let business users explore data using natural language with AI/BI Genie.

Democratize data access by enabling anyone to query data conversationally without writing SQL.

- Share live, governed data externally using Delta Sharing.

Use open standards to securely distribute data with partners, customers or other business units.

- Orchestrate data and AI workflows across the platform using Databricks Jobs.

Manage dependencies, scheduling and execution from a single pane of glass across your pipelines and ML jobs.

- Publish metadata to Microsoft Purview for unified data discovery and governance.

Extend your governance reach by syncing Unity Catalog metadata for enterprise-wide visibility.

- Leverage core Azure services for platform governance.

- Manage identity and single sign-on (SSO) via Microsoft Entra ID

- Manage costs and billing via Microsoft Cost Management

- Monitor telemetry and system health via Azure Monitor

- Manage encryption keys and secrets via Azure Key Vault

- Facilitate version control and CI/CD via Azure DevOps and GitHub

- Ensure cloud security management via Microsoft Defender for Cloud