The Databricks AI Research Team

The Databricks AI Research Team's posts

AI Research

February 3, 2026/9 min read

MemAlign: Building Better LLM Judges From Human Feedback With Scalable Memory

AI Research

January 6, 2026/11 min read

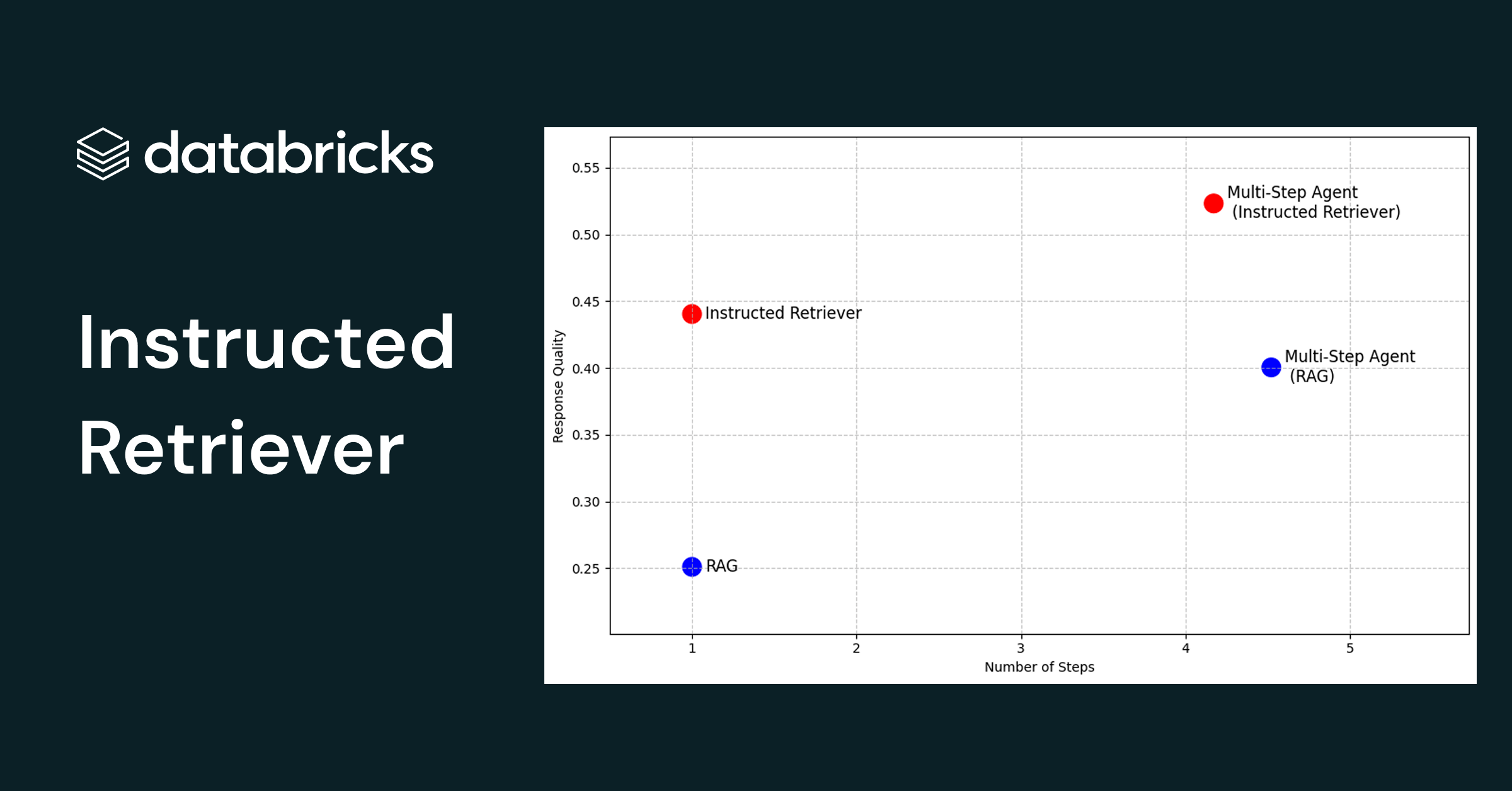

Instructed Retriever: Unlocking System-Level Reasoning in Search Agents

AI Research

December 9, 2025/12 min read

Introducing OfficeQA: A Benchmark for End-to-End Grounded Reasoning

Product

November 11, 2025/5 min read

PDFs to Production: Announcing state-of-the-art document intelligence on Databricks

AI Research

September 24, 2025/12 min read

Building State-of-the-Art Enterprise Agents 90x Cheaper with Automated Prompt Optimization

AI Research

August 12, 2025/10 min read

Judging with Confidence: Meet PGRM, the Promptable Reward Model

AI Research

August 4, 2025/7 min read

Agent Learning from Human Feedback (ALHF): A Databricks Knowledge Assistant Case Study

AI Research

July 30, 2025/4 min read

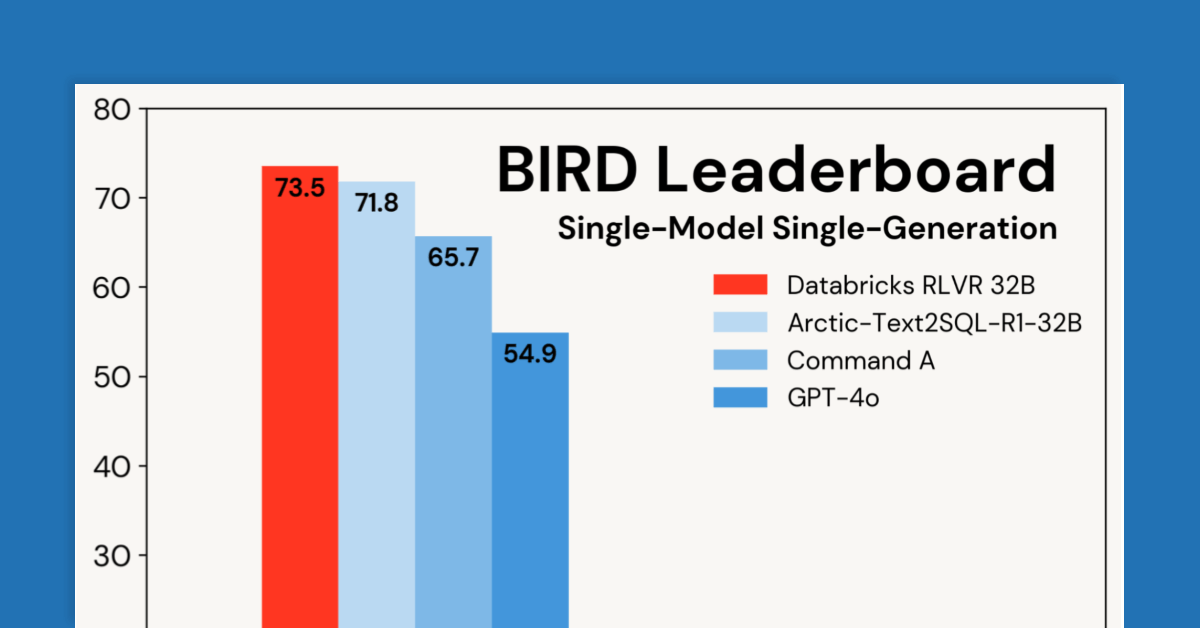

The Power of RLVR: Training a Leading SQL Reasoning Model on Databricks

AI Research

March 25, 2025/8 min read

TAO: Using test-time compute to train efficient LLMs without labeled data
