In this blog, we dive into Agent Learning from Human Feedback (ALHF) — a new machine learning paradigm where agents learn directly from minimal natural language feedback, not just numeric rewards or static labels. This unlocks faster, more intuitive agent adaptation for enterprise applications, where expectations are often specialized and hard to formalize.

ALHF powers the Databricks Agent Bricks product. In our case study, we look at Agent Bricks Knowledge Assistant (KA) - which continually improves its responses through expert feedback. As shown in Figure 1, ALHF drastically boosts the overall answer quality on Databricks DocsQA with as few as 4 feedback records. With just 32 feedback records, we more than quadruple the answer quality over the static baselines. Our case study demonstrates the efficacy of ALHF and opens up a compelling new direction for agent research.

The Promise of Teachable AI Agents

In working with enterprise customers of Databricks, a key challenge we’ve seen is that many enterprise AI use cases depend on highly specialized internal business logic, proprietary data, and intrinsic expectations, which are not known externally (see our Domain Intelligence Benchmark to learn more). Therefore, even the most advanced systems still need substantial tuning to meet the quality threshold of enterprise use cases.

To tune these systems, existing approaches rely on either explicit ground truth outputs, which are expensive to collect, or reward models, which only give binary/scalar signals. In order to solve these challenges, we describe Agent Learning from Human Feedback (ALHF), a learning paradigm where an agent adapts its behavior by incorporating a small amount of natural language feedback from experts. This paradigm offers a natural, cost-effective channel for human interaction and enables the system to learn from rich expectation signals.

Example

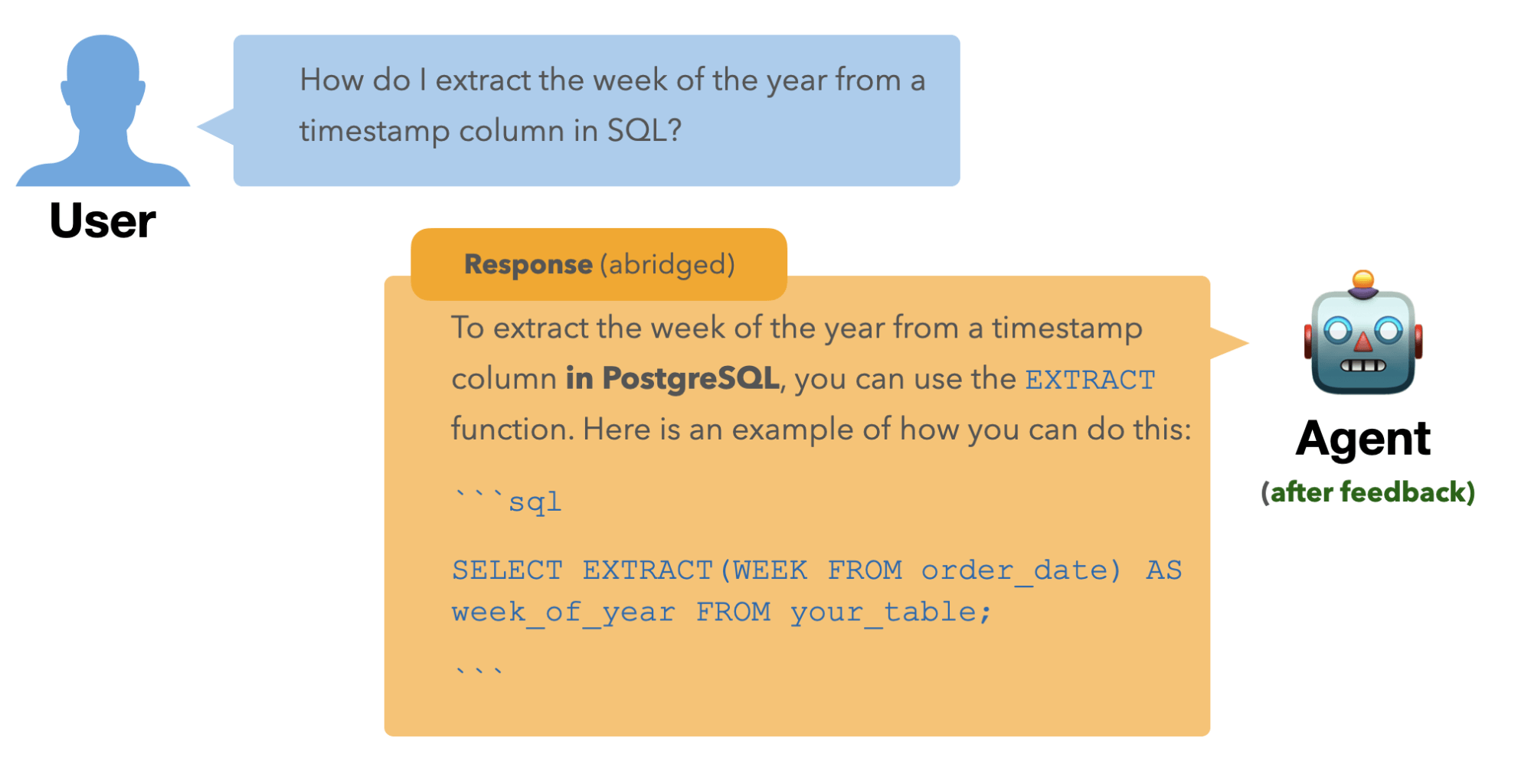

Let’s say we create a Question Answering (QA) agent to answer questions for a hosted database company. Here’s an example question:

The agent suggested using the function weekofyear(), supported in multiple flavors of SQL (MySQL, MariaDB, etc.). This answer is correct in that when used appropriately, weekofyear() does achieve the desired functionality. However, it isn’t supported in PostgreSQL, the SQL flavor preferred by our user group. Our Subject Matter Expert (SME) can provide natural language feedback on the response to communicate this expectation as above, and the agent will adapt accordingly:

ALHF adapts the system responses not only for this single question, but also for questions in future conversations where the feedback is relevant, for example:

As this example shows, ALHF gives developers and SMEs a frictionless and intuitive way to steer an agent’s behavior using natural language — aligning it with their expectations.

ALHF in Agent Bricks

We’ll use one specific use case of the Agent Bricks product - Knowledge Assistant - as a case study to demonstrate the power of ALHF.

Knowledge Assistant (KA) provides a declarative approach to create a chatbot over your documents, delivering high-quality, reliable responses with citations. KA leverages ALHF to continuously learn expert expectations from natural language feedback and improve the quality of its responses.

KA first asks for high-level task instructions. Once it’s connected to the relevant knowledge sources, it begins answering questions. Experts can then leverage an Improve Quality mode to review responses and leave feedback, which KA incorporates through ALHF to refine future answers.

Evaluation

To demonstrate the value of ALHF in KA, we evaluate KA using DocsQA – a dataset of questions and reference answers on Databricks documentation, part of our Domain Intelligence Benchmark. For this dataset, we also have a set of defined expert expectations. For a small set of candidate responses generated by KA, we create a piece of terse natural language feedback (like in the above example) based on these expectations and provide the feedback to KA to refine its responses. We then measure the response quality across multiple rounds of feedback to evaluate if KA successfully adapts to meet expert expectations.

Note that while the reference answers reflect factual correctness — whether an answer contains relevant and accurate information to address the question — they are not necessarily ideal in terms of aligning with expert expectations. As illustrated in our earlier example, the initial response may be factually correct for many flavors of SQL, but may still fall short if the expert expects a PostgreSQL-specific response.

Considering these two dimensions of correctness, we evaluate the quality of a response using two LLM judges:

- Answer Completeness: How well the response aligns with the reference response from the dataset. This serves as a baseline measure of factual correctness.

- Feedback Adherence: How well the response satisfies the specific expert expectations. This measures the agent’s ability to tailor its output based on personalized criteria.

Results

Figure 2 shows how KA improves in quality with increasing rounds of expert feedback on DocsQA. We report results for a held-out test set.

- Answer Completeness: Without feedback, KA already produces high-quality responses comparable with leading competing systems. With up to 32 pieces of feedback, KA’s Answer Completeness improves by 12 percentage points, clearly outperforming competitors.

- Feedback Adherence: The distinction between Feedback Adherence and Answer Completeness is evident – all systems start with low adherence scores without feedback. But here’s where ALHF shines: with feedback KA adherence score jumps from 11.7% to nearly 80%, showcasing the dramatic impact of ALHF.

Overall, ALHF is an effective mechanism for refining and adapting a system’s behavior to meet the specific expert expectations. In particular, it is highly sample-efficient: you don’t need hundreds or thousands of examples, but can see clear gains with a small amount of feedback.

ALHF: the technical challenge

These impressive results are possible because KA successfully addresses two core technical challenges of ALHF.

Learning When to Apply Feedback

When an expert gives feedback on one question, how does the agent know which future questions should benefit from that same insight? This is the challenge of scoping — determining the right scope of applicability for each piece of feedback. Or alternatively put, determining the relevance of a piece of feedback to a question.

Consider our PostgreSQL example. When the expert says "the answer should be compatible with PostgreSQL", this feedback shouldn't just fix that one response. It should inform all future SQL-related questions. But it shouldn't affect unrelated queries, like “Should I use matplotlib or seaborn for this chart?”

We adopt an agent memory approach that records all prior feedback and allows the agent to efficiently retrieve relevant feedback for a new question. This enables the agent to dynamically and holistically determine which insights are most relevant to the current question.

Adapting the Right System Components

The second challenge is assignment — figuring out which parts of the system need to change in response to feedback. KA isn't a single model; it's a multi-component pipeline that generates search queries, retrieves documents, and produces answers. Effective ALHF requires updating the right components in the right ways.

KA is designed with a set of LLM-powered components that are parameterized by feedback. Each component is a module that accepts relevant feedback and adapts its behavior accordingly. Taking the example from earlier, where the SME provides the following feedback on the date extraction example:

Later, the user asks a related question — “How do I get the difference between two dates in SQL?”. Without receiving any new feedback, KA automatically applies what it learned from the earlier interaction. It begins by modifying the search query in the retrieval stage, tailoring it to the context:

Then, it produces a PostgreSQL-specific response:

By precisely routing the feedback to the appropriate retrieval and response generation components, ALHF ensures that the agent learns and generalizes effectively from expert feedback.

What ALHF Means for You: Inside Agent Bricks

Agent Learning from Human Feedback (ALHF) represents a major step forward in enabling AI agents to truly understand and adapt to expert expectations. By enabling natural language feedback to incrementally shape an agent's behavior, ALHF provides a flexible, intuitive, and powerful mechanism for steering AI systems towards specific enterprise needs. Our case study with Knowledge Assistant demonstrates how ALHF can dramatically boost response quality and adherence to expert expectations, even with minimal feedback. As Patrick Vinton, Chief Technology Officer at Analytics8, a KA customer, said:

“Leveraging Agent Bricks, Analytics8 achieved a 40% increase in answer accuracy with 800% faster implementation times for our use cases, ranging from simple HR assistants to complex research assistants sitting on top of extremely technical, multimodal white papers and documentation. Post launch, we’ve also observed that answer quality continues to climb.”

ALHF is now a built-in capability within the Agent Bricks product, empowering Databricks customers to deploy highly customized enterprise AI solutions. We encourage all customers interested in leveraging the power of teachable AI to connect with their Databricks Account Teams and try KA and other Agent Bricks use cases to explore how ALHF can transform their generative AI workflows.

Veronica Lyu and Kartik Sreenivasan contributed equally

Authors: Veronica Lyu, Kartik Sreenivasan, Moonsoo Lee, Michael Bendersky, Alkis Polyzotis, Xiangrui Meng, Omar Khattab, Sam Havens, Michael Carbin and Matei Zaharia