AI Security

Best practices for mitigating the risks associated with AI models

AI security refers to the practices, measures and strategies implemented to protect artificial intelligence systems, models and data from unauthorized access, manipulation or malicious activities. Organizations must implement robust security protocols, encryption methods, access controls and monitoring mechanisms to safeguard AI assets and mitigate potential risks associated with their use.

The Databricks Security team works with our customer base to deploy AI and machine learning (ML) on Databricks securely with the appropriate features that meet customers’ architecture requirements. We also work with dozens of experts internally at Databricks and in the larger ML and GenAI community to identify security risks to AI systems and define the controls necessary to mitigate those risks.

Understanding AI systems

What components make up an AI system and how do they work together?

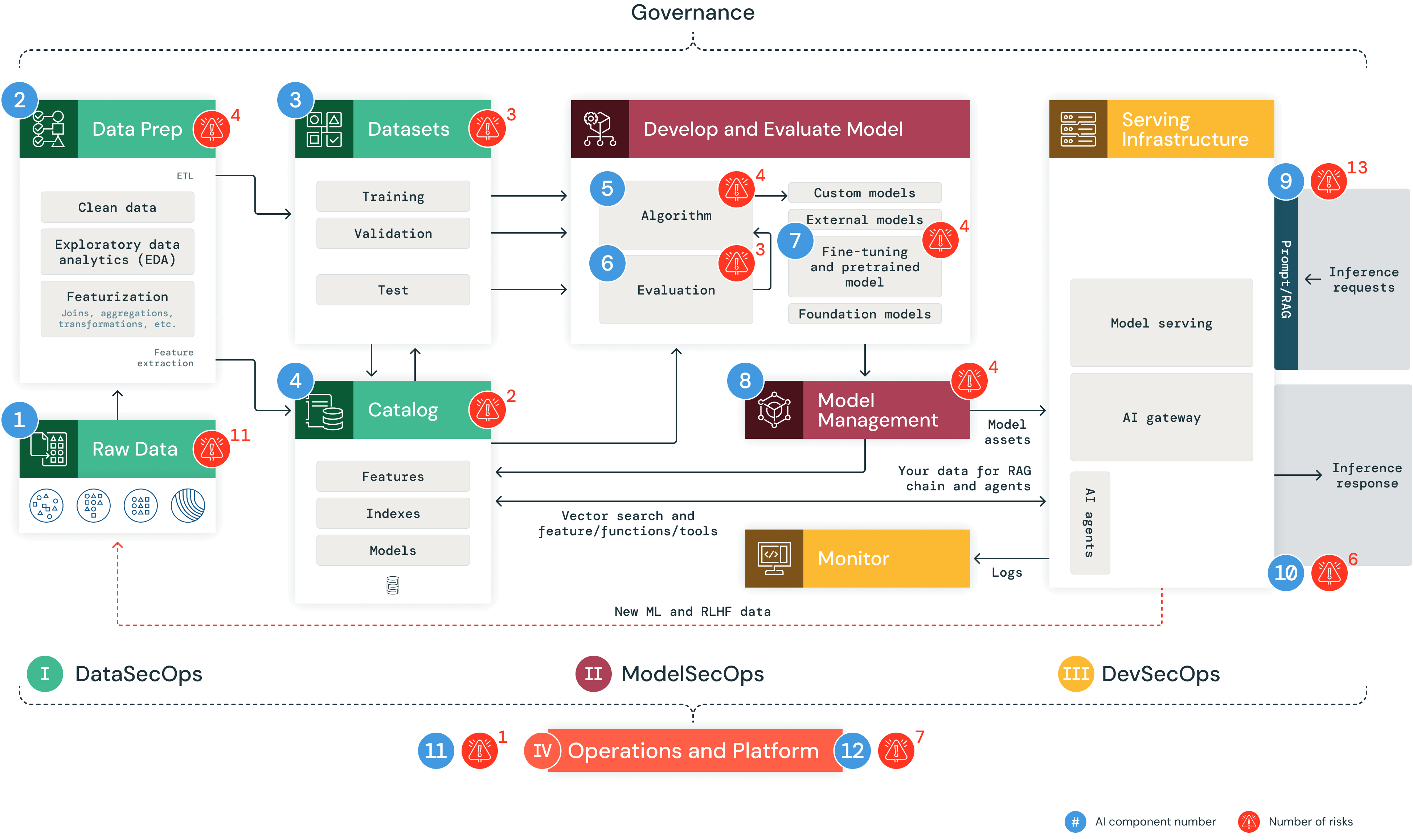

AI systems are composed of data, code and models. A typical end-to-end AI system has 12 foundational architecture components, broadly categorized into four major stages:

- Data operations include ingesting and transforming data and ensuring data security and governance. Good ML models depend on reliable data pipelines and secure infrastructure.

- Model operations include building custom models, acquiring models from a model marketplace or using software-as-a-service (SaaS) large language models (LLMs), such as those offered by OpenAI. Developing a model requires a series of experiments and a way to track and compare the conditions and results of those experiments.

- Model deployment and serving consists of securely building model images, isolating and securely serving models, automated scaling, rate limiting and monitoring deployed models.

- Operations and platform include platform vulnerability management and patching, model isolation, system controls and authorized access to models, with security built into the architecture. This stage also covers operational tooling for CI/CD. It ensures the complete lifecycle meets the required standards by keeping the distinct execution environments (development, staging and production) secure for MLOps.

The image above highlights the 12 components and how they interact across an AI system.
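Rate limiting is one of the serving-stage controls listed above. The following is a minimal sketch of one common approach, a token bucket, and not a description of how Databricks Model Serving implements it. The class name `TokenBucket` and its parameters are hypothetical and chosen only for illustration.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TokenBucket:
    """Hypothetical per-client rate limiter for inference requests."""

    capacity: float           # maximum burst size, in requests
    refill_per_second: float  # sustained request rate
    tokens: float = field(init=False)
    last_refill: float = field(init=False)

    def __post_init__(self) -> None:
        # Start with a full bucket so an initial burst is allowed.
        self.tokens = self.capacity
        self.last_refill = time.monotonic()

    def allow(self, now: Optional[float] = None) -> bool:
        """Consume one token and return True if the request may proceed."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.last_refill)
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False
```

A serving layer could keep one bucket per client or per API token and reject a request when `allow()` returns `False`. The optional `now` argument exists only so the behavior can be checked deterministically.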

Understanding AI security risks

What are the security threats that may arise when adopting AI?

In our analysis of AI systems, we identified 62 technical security risks across the 12 foundational architecture components. In the table below, we outline these basic components, which align with steps in any AI system, and highlight some examples of security risks. The full list of 62 technical security risks can be found in the Databricks AI Security Framework.

| AI system stage | AI system components | Potential security risks |
|---|---|---|
| Data operations | 1. Raw data 2. Data preparation 3. Datasets 4. Catalog and governance | 20 specific risks |
| Model operations | 5. ML algorithm 6. Evaluation 7. Model build 8. Model management | 15 specific risks |
| Model deployment and serving | 9. Model Serving: inference requests 10. Model Serving: inference responses | 19 specific risks |
| Operations and platform | 11. ML operations 12. ML platform | 8 specific risks |
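As a quick consistency check, the stages, components and per-stage risk counts from the table can be written down as a small inventory. This is only a sketch: the `Stage` type and the helper functions are hypothetical names introduced here, and the data is copied from the table rather than from the framework itself.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Stage:
    """One AI system stage with its components and risk count (illustrative)."""

    name: str
    components: Tuple[str, ...]
    risk_count: int


# Values transcribed from the table above.
STAGES: Tuple[Stage, ...] = (
    Stage("Data operations",
          ("Raw data", "Data preparation", "Datasets", "Catalog and governance"), 20),
    Stage("Model operations",
          ("ML algorithm", "Evaluation", "Model build", "Model management"), 15),
    Stage("Model deployment and serving",
          ("Model Serving: inference requests", "Model Serving: inference responses"), 19),
    Stage("Operations and platform",
          ("ML operations", "ML platform"), 8),
)


def total_components(stages: Sequence[Stage]) -> int:
    return sum(len(s.components) for s in stages)


def total_risks(stages: Sequence[Stage]) -> int:
    return sum(s.risk_count for s in stages)


def numbered_components(stages: Sequence[Stage]) -> List[Tuple[int, str, str]]:
    """Return (number, stage name, component) with numbering running across stages."""
    rows: List[Tuple[int, str, str]] = []
    number = 1
    for stage in stages:
        for component in stage.components:
            rows.append((number, stage.name, component))
            number += 1
    return rows
```

Summing the per-stage counts gives 20 + 15 + 19 + 8 = 62 risks across 4 + 4 + 2 + 2 = 12 components, which matches the totals stated in the text.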

What controls are available for mitigating AI security risks?

There are 64 prescriptive controls for mitigating the 62 identified AI security risks. These controls include:

- Cybersecurity best practices such as single sign-on, encryption techniques, library and source code controls and network access controls with a defense-in-depth approach to managing risks

- Data and AI governance–specific controls such as data classification, data lineage, data versioning, model tracking, data and model asset permissions and model governance

- AI-specific controls like model serving isolation, prompt tools, auditing and monitoring models, MLOps and LLMOps, centralized LLM management, fine-tuning and pretraining your models
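To make two of the controls above concrete (model asset permissions and auditing), here is a minimal sketch of a deny-by-default permission check that records every decision. It is not the Databricks permission model or API. `ModelAccessPolicy`, its methods and the example principals are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class ModelAccessPolicy:
    """Hypothetical deny-by-default permissions on model assets, with an audit trail."""

    # (principal, model) -> set of allowed actions
    grants: Dict[Tuple[str, str], Set[str]] = field(default_factory=dict)
    # (principal, model, action, allowed) for every check performed
    audit_log: List[Tuple[str, str, str, bool]] = field(default_factory=list)

    def grant(self, principal: str, model: str, action: str) -> None:
        self.grants.setdefault((principal, model), set()).add(action)

    def is_allowed(self, principal: str, model: str, action: str) -> bool:
        # Anything not explicitly granted is denied.
        allowed = action in self.grants.get((principal, model), set())
        # Record both permitted and denied attempts for later review.
        self.audit_log.append((principal, model, action, allowed))
        return allowed


policy = ModelAccessPolicy()
policy.grant("alice", "churn_model", "query")
print(policy.is_allowed("alice", "churn_model", "query"))  # explicitly granted
print(policy.is_allowed("bob", "churn_model", "query"))    # no grant, so denied
```

Logging denied attempts alongside permitted ones is a deliberate choice in this sketch, since repeated denials are often the signal a monitoring process looks for.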

For an in-depth overview of the security risks associated with AI systems and the controls to implement for each risk, download the Databricks AI Security Whitepaper.

Best practices for securing AI and ML models

Data and security teams must actively collaborate to improve the security of AI systems. Whether you are implementing traditional machine learning solutions or LLM-driven applications, Databricks recommends following the steps outlined in the Databricks AI Security Whitepaper.

AI Security Resources

Databricks AI Security Framework (DASF)

The Databricks Security team created the Databricks AI Security Framework (DASF) to address evolving vulnerabilities in AI systems with a holistic approach to mitigating risks beyond model security.

Databricks AI Risk Workshop

The Databricks Security team hosts AI risk workshops to help leaders understand AI systems, their risks and mitigation strategies.

AI Security Fundamentals Training

This course explores the fundamentals of security in AI systems within the Databricks Data Intelligence Platform in five comprehensive modules.

AI Security Events and Webinars

Databricks security leaders regularly share expertise at events with industry leaders, enterprises, agencies and vendors.