What’s New in Data Engineering and Streaming - January 2024

Databricks recently announced the Data Intelligence Platform, a natural evolution of the lakehouse architecture we pioneered. The idea of a Data Intelligence Platform is that of a single, unified platform that deeply understands an organization's unique data, empowering anyone to easily access the data they need and quickly build turnkey custom AI applications.

Every dashboard, app, and model built on a Data Intelligence Platform requires reliable data to function properly, and reliable data requires the best data engineering practices. Data engineering is core to everything we do – Databricks has empowered data engineers with best practices for years through Spark, Delta Lake, Workflows, Delta Live Tables, and newer Gen AI features like Databricks Assistant.

In the age of AI, data engineering best practices become even more mission-critical. So while we are committed to democratizing access to the explicitly generative AI functionality that will continue unlocking the intelligence of the Data Intelligence Platform, we are just as committed to innovating at our core through foundational data engineering offerings. After all, nearly three-quarters of executives believe unreliable data is the single biggest threat to the success of their AI initiatives.

At Data + AI Summit in June 2023, we announced a slate of new feature launches within the Data Engineering and Streaming portfolio, and we're pleased to recap several new developments in the portfolio from the months since then. Here they are:

Analyst Recognition

Databricks was recently named a Leader in The Forrester Wave™: Cloud Data Pipelines, Q4 2023. In its 26-criterion evaluation of cloud data pipeline providers, Forrester identified the most significant ones and researched, analyzed, and scored them. The report shows how each provider measures up and helps data management professionals select the right one for their needs.

You can read the full report, including Forrester's take on major vendors' current offerings, strategy, and market presence.

We're proud of this recognition and placement, and we believe the Databricks Data Intelligence Platform is the best place to build data pipelines, with your data, for all your AI and analytics initiatives. In executing on this vision, here are a number of new features and functionality from the last 6 months.

Data Ingestion

In November we announced our acquisition of Arcion, a leading provider of real-time data replication technologies. Arcion's capabilities will enable Databricks to provide native solutions to replicate and ingest data from various databases and SaaS applications, allowing customers to focus on the real work of creating value and AI-driven insights from their data.

Arcion's code-optional, low-maintenance Change Data Capture (CDC) technology will help to power new Databricks platform capabilities that enable downstream analytics, streaming, and AI use cases through native connectors to enterprise database systems such as Oracle, SQL Server and SAP, as well as SaaS applications such as Salesforce and Workday.

Spark Structured Streaming

Spark Structured Streaming is the best engine for stream processing, and Databricks is the best place to run your Spark workloads. The following improvements to Databricks streaming, built on the Spark Structured Streaming engine, expand on years of streaming innovation to empower use cases like real-time analytics, real-time AI + ML, and real-time operational applications.

Apache Pulsar support

Apache Pulsar is an all-in-one messaging and streaming platform and a top 10 Apache Software Foundation project.

One of the tenets of Project Lightspeed is ecosystem expansion - being able to connect to a variety of popular streaming sources. In addition to preexisting support for sources like Kafka, Kinesis, Event Hubs, Azure Synapse and Google Pub/Sub, in Databricks Runtime 14.1 and above, you can use Structured Streaming to stream data from Apache Pulsar on Databricks. As it does with these other messaging/streaming sources, Structured Streaming provides exactly-once processing semantics for data read from Pulsar sources.

The documentation lists all supported streaming sources and covers the Pulsar connector in detail.
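As a minimal sketch of what a Pulsar streaming read might look like: the `pulsar` format name and the `service.url` and `topics` options follow the connector documentation as we understand it, while the helper name, broker URL, and topic names are placeholders. `spark` is assumed to be the session that a Databricks notebook provides on Databricks Runtime 14.1 or above.

```python
def read_pulsar_stream(spark, service_url, topics):
    """Build a streaming DataFrame over one or more Pulsar topics.

    `spark` is the SparkSession a Databricks notebook provides; the helper
    name and its arguments are illustrative, not part of any Databricks API.
    """
    return (
        spark.readStream
        .format("pulsar")                    # Pulsar source (DBR 14.1 and above)
        .option("service.url", service_url)  # e.g. "pulsar://broker.example.com:6650"
        .option("topics", ",".join(topics))  # comma-separated list of topics
        .load()
    )


# In a notebook, with a placeholder broker and topic names:
# events = read_pulsar_stream(
#     spark, "pulsar://broker.example.com:6650", ["orders", "returns"]
# )
```

The returned DataFrame can then be written out with `writeStream` like any other Structured Streaming source.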

UC View as streaming source

In Databricks Runtime 14.1 and above, you can use Structured Streaming to perform streaming reads from views registered with Unity Catalog. See the documentation for more information.
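A streaming read from a view uses the same `readStream.table` call as a read from a table. The sketch below assumes a notebook-provided `spark` session; the helper name and the catalog, schema, and view names are hypothetical.

```python
def stream_from_view(spark, catalog, schema, view):
    """Start a streaming read from a Unity Catalog view.

    The view is addressed by its three-level name: catalog.schema.view.
    """
    return spark.readStream.table(f"{catalog}.{schema}.{view}")


# In a notebook, with hypothetical names:
# recent_orders = stream_from_view(spark, "main", "sales", "recent_orders_v")
```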

AAD auth support

Databricks now supports Azure Active Directory authentication - a required authentication protocol for many organizations - for using the Databricks Kafka connector with Azure Event Hubs.
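For orientation, the Kafka source options for an Azure Active Directory (OAuth) connection to Event Hubs might be assembled roughly as follows. This is a sketch under assumptions: the option keys, shaded class names, and token endpoint are reproduced from our recollection of the Databricks documentation and should be checked against it before use, and the helper name and all argument values are hypothetical. In practice the client secret would come from a secret scope rather than a literal.

```python
def event_hubs_oauth_options(namespace_host, topic, tenant_id, client_id, client_secret):
    """Assemble Kafka-source options for Event Hubs with OAuth (AAD) auth.

    namespace_host looks like "<namespace>.servicebus.windows.net".
    Option names and class names are assumptions to verify against the docs.
    """
    jaas = (
        "kafkashaded.org.apache.kafka.common.security.oauthbearer."
        "OAuthBearerLoginModule required "
        f'clientId="{client_id}" clientSecret="{client_secret}" '
        f'scope="https://{namespace_host}/.default" ssl.protocol="SSL";'
    )
    return {
        # Event Hubs exposes its Kafka-compatible endpoint on port 9093.
        "kafka.bootstrap.servers": f"{namespace_host}:9093",
        "subscribe": topic,
        "kafka.security.protocol": "SASL_SSL",
        "kafka.sasl.mechanism": "OAUTHBEARER",
        "kafka.sasl.jaas.config": jaas,
        "kafka.sasl.oauthbearer.token.endpoint.url":
            f"https://login.microsoft.com/{tenant_id}/oauth2/v2.0/token",
        "kafka.sasl.login.callback.handler.class":
            "kafkashaded.org.apache.kafka.common.security.oauthbearer."
            "secured.OAuthBearerLoginCallbackHandler",
    }


# In a notebook, with placeholder values:
# opts = event_hubs_oauth_options(
#     "my-namespace.servicebus.windows.net", "telemetry",
#     tenant_id, client_id, client_secret,
# )
# df = spark.readStream.format("kafka").options(**opts).load()
```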

Delta Live Tables

Delta Live Tables (DLT) is a declarative ETL framework for the Databricks Data Intelligence Platform that helps cost-effectively simplify streaming and batch ETL. Creating data pipelines with DLT allows users to simply define the transformations to perform – task orchestration, cluster management, monitoring, data quality and error handling are automatically taken care of.

Append Flows API

The append_flow API lets you write multiple streams to a single streaming table in a pipeline (for example, from multiple Kafka topics). This allows users to:

- Add and remove streaming sources that append data to an existing streaming table without requiring a full refresh; and

- Update a streaming table by appending missing historical data (backfilling).

See the documentation for exact syntax and examples.
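The pattern described above can be sketched as two flows appending to one streaming table. The table name, topic names, and broker address are hypothetical, and `spark` is assumed to be supplied by the pipeline runtime. Because the `dlt` module exists only inside a DLT pipeline (and an unrelated package of the same name exists on PyPI), the sketch falls back to a small stand-in that merely records which flows target which table, so the file can be loaded elsewhere.

```python
try:
    import dlt  # the Delta Live Tables module, available inside a pipeline
    dlt.append_flow  # guards against an unrelated package that is also named dlt
except (ImportError, AttributeError):
    class _DltStandIn:
        """Records registrations only; it does not run any streaming logic."""
        def __init__(self):
            self.tables, self.flows = [], {}

        def create_streaming_table(self, name):
            self.tables.append(name)

        def append_flow(self, target):
            def register(fn):
                self.flows.setdefault(target, []).append(fn.__name__)
                return fn
            return register

    dlt = _DltStandIn()

TARGET = "events_all"  # hypothetical streaming table name

# Declare the target once; each flow below appends to it.
dlt.create_streaming_table(TARGET)


def kafka_topic(topic):
    # `spark` comes from the pipeline runtime; the broker is a placeholder.
    return (
        spark.readStream.format("kafka")
        .option("kafka.bootstrap.servers", "broker.example.com:9092")
        .option("subscribe", topic)
        .load()
    )


@dlt.append_flow(target=TARGET)
def orders_flow():
    return kafka_topic("orders")


@dlt.append_flow(target=TARGET)
def returns_flow():
    return kafka_topic("returns")
```

Adding a third topic would mean adding a third decorated function, and a backfill could be expressed the same way as a flow that reads the historical data.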

UC Integration

Unity Catalog is the key to unified governance on the Data Intelligence Platform across all your data and AI assets, including DLT pipelines. There have been several recent improvements to the Delta Live Tables + Unity Catalog Integration. The following are now supported:

- Writing tables to Unity Catalog schemas with custom storage locations

- Cloning DLT pipelines from the Hive Metastore to Unity Catalog, making it easier and quicker to migrate HMS pipelines to Unity Catalog

The documentation has the full list of what is supported.

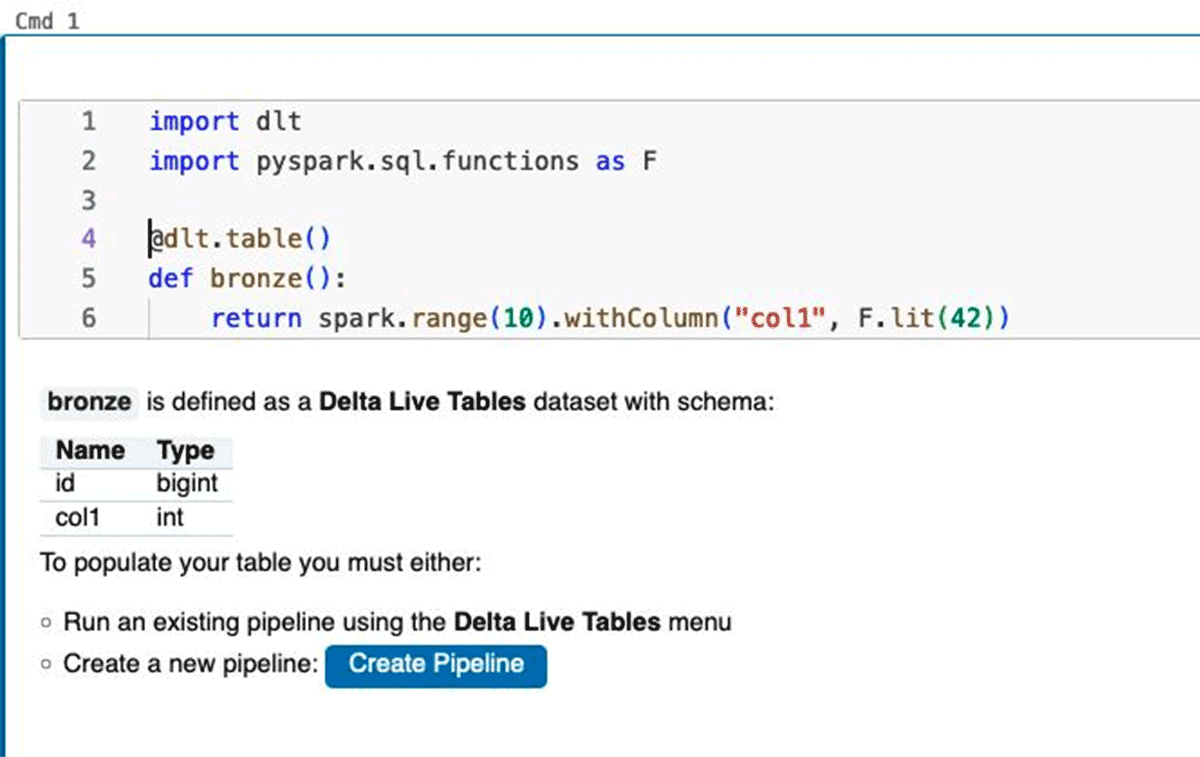

Developer Experience + Notebook Improvements

On an interactive cluster running Databricks Runtime 13.3 or above, users can now execute individual notebook cells that define DLT code on a regular DBR cluster, without processing any data. This catches issues such as syntax errors, wrong table names, or wrong column names early in the development process, without having to execute a pipeline run. Users can also incrementally build and resolve the schemas for DLT datasets across notebook cell executions.

Google Cloud GA

DLT pipelines are now generally available on Google Cloud, empowering data engineers to build reliable streaming and batch data pipelines effortlessly across Google Cloud environments. By expanding the availability of DLT pipelines to Google Cloud, Databricks reinforces its commitment to providing a partner-friendly ecosystem while offering customers the flexibility to choose the cloud platform that best suits their needs. In conjunction with the Pub/Sub streaming connector, we're making it easier to work with streaming data pipelines on Google Cloud.

Databricks Workflows

Enhanced Control Flow

An important part of data orchestration is control flow - the management of task dependencies and control of how those tasks are executed. This is why we've made some important additions to control flow capabilities in Databricks Workflows including the introduction of conditional execution of tasks and of job parameters. Conditional execution of tasks refers to users' ability to add conditions that affect the way a workflow executes by adding "if/else" logic to a workflow and defining more sophisticated multi-task dependencies. Job parameters allow you to define key/value pairs that are made available to every task in a workflow and can help control the way a workflow runs by adding more granular configurations. You can learn more about these new capabilities in this blog.
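To make the two additions concrete, here is a sketch of a job definition that combines a job parameter with an if/else condition. The field names (`parameters`, `condition_task`, the `outcome` key on `depends_on`) and the `{{job.parameters.env}}` reference follow the Jobs API as we understand it and should be verified against the API reference. The job name, task keys, and notebook paths are hypothetical, and compute settings are omitted.

```python
import json


def build_job_spec(default_env="dev"):
    """Return a job definition with one job parameter and an if/else branch."""
    return {
        "name": "nightly_ingest",
        # Job parameter: a key/value pair made available to every task.
        "parameters": [{"name": "env", "default": default_env}],
        "tasks": [
            {
                "task_key": "ingest",
                "notebook_task": {"notebook_path": "/Pipelines/ingest"},
            },
            {
                # If/else condition, evaluated after `ingest` completes.
                "task_key": "is_prod",
                "depends_on": [{"task_key": "ingest"}],
                "condition_task": {
                    "op": "EQUAL_TO",
                    "left": "{{job.parameters.env}}",
                    "right": "prod",
                },
            },
            {
                # Runs only when the condition evaluates to true.
                "task_key": "publish",
                "depends_on": [{"task_key": "is_prod", "outcome": "true"}],
                "notebook_task": {"notebook_path": "/Pipelines/publish"},
            },
            {
                # Runs only when the condition evaluates to false.
                "task_key": "dry_run_report",
                "depends_on": [{"task_key": "is_prod", "outcome": "false"}],
                "notebook_task": {"notebook_path": "/Pipelines/dry_run_report"},
            },
        ],
    }


job_spec = build_job_spec()
job_json = json.dumps(job_spec, indent=2)  # payload for a job-creation request
```

Overriding `env` at run time would switch which branch executes without editing the job definition.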

Modular Orchestration

As our customers build more complex workflows with larger numbers of tasks and dependencies, it becomes increasingly hard to maintain, test, and monitor these workflows. This is why we've added modular orchestration to Databricks Workflows: the ability to break down complex workflows into "child jobs" that are invoked as tasks within a higher-level "parent job". This capability lets organizations break down DAGs to be owned by different teams, introduce reusability of workflows, and, by doing so, achieve faster development and more reliable operations when orchestrating large workflows.
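A parent job of this kind can be sketched as a list of tasks that each invoke an existing child job. The `run_job_task` field with a `job_id` follows the Jobs API as we understand it; the helper name, job name, labels, and job IDs are hypothetical.

```python
def parent_job_spec(name, child_job_ids):
    """Chain existing child jobs as sequential tasks of one parent job.

    child_job_ids maps a short label to the ID of an existing job; the
    children run in the order the mapping lists them.
    """
    tasks = []
    previous_key = None
    for label, job_id in child_job_ids.items():
        task = {
            "task_key": f"run_{label}",
            "run_job_task": {"job_id": job_id},  # invoke the child job as a task
        }
        if previous_key is not None:
            # Start this child only after the previous one has finished.
            task["depends_on"] = [{"task_key": previous_key}]
        tasks.append(task)
        previous_key = task["task_key"]
    return {"name": name, "tasks": tasks}


# Hypothetical child jobs, each owned by a different team:
parent = parent_job_spec(
    "daily_platform_run",
    {"ingestion": 101, "transformations": 202, "reporting": 303},
)
```

Each team can change its own child job independently, and the parent only needs the job IDs to stay stable.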

Summary of Recent Innovation

This concludes the summary of features we've launched in the Data Engineering and Streaming portfolio since Data + AI Summit 2023. Below is a full inventory of launches we've blogged about over the last year - since the beginning of 2023:

- November 2023: Enhanced Control Flow in Databricks Workflows

- September 2023: Orchestrating Data Analytics with Databricks Workflows

- August 2023: Delta Live Tables GA on Google Cloud

- August 2023: Multiple Stateful Operators in Structured Streaming

- August 2023: Modular Orchestration with Databricks Workflows

- July 2023: New Monitoring and Alerting Capabilities in Databricks Workflows

- June 2023: What's New in Data Engineering - DAIS 2023

- June 2023: Project Lightspeed 1-Year Update

- June 2023: Materialized Views and Streaming Tables in DB SQL

- June 2023: Delta Live Tables + Unity Catalog Integration

- June 2023: Google Pub/Sub Connector

- June 2023: Adaptive Query Execution in Spark Structured Streaming

- May 2023: Low-Code Ingestion via UI

- May 2023: Subsecond Latency in Spark Structured Streaming

- April 2023: Recent Workflows Updates

- February 2023: Orchestrating dbt Projects with Databricks Workflows

- January 2023: New Workflows Notifications

You can also stay tuned to the quarterly roadmap webinars to learn what's on the horizon for the Data Engineering portfolio. It's an exciting time to be working with data, and we're excited to partner with Data Engineers, Analysts, ML Engineers, Analytics Engineers, and more to democratize data and AI within your organizations!